AI Adoption vs. Trust: What Our Data Reveals

Artificial intelligence is quickly becoming part of everyday recruiting operations. What began as experimentation has moved into production. Sourcing tools, screening automation, scheduling assistants, and workflow support are now being explored and implemented across organizations of all sizes.

This shift is real and accelerating.

What has not kept pace is trust.

Skywalk Group’s Employer and Candidate Sentiment Surveys from July 2025 and January 2026 show a widening gap between how often AI is being used and how confident people feel about its role in hiring. That gap matters because it shapes behavior, decision-making, and experience, not just opinion.

Employers Are Adopting AI Under Real Pressure

In July 2025, only 18 percent of employers reported using AI in their recruiting process. By January 2026, more than 60 percent reported using AI in some form. Reported usage more than tripled in six months, a shift that is significant and unlikely to reverse.

Much of this adoption is driven by practical pressure. Hiring teams are asked to move faster, manage more requisitions, and operate with fewer resources. Automation offers relief. It promises efficiency, consistency, and scale at a time when demand has not slowed.

There is also a human element at play. Leaders across organizations are navigating a constant stream of messaging about AI, innovation, and competitiveness. In that environment, it can feel risky to stand still. For many, adopting AI becomes less about enthusiasm and more about staying relevant and responsible as a leader.

When adoption is driven primarily by urgency rather than clarity, implementations can happen quickly and unevenly. Tools get turned on before teams fully understand how they fit into existing workflows or how they will be experienced by candidates. In those cases, technology can introduce new friction instead of removing it.

At the same time, employers remain cautious about AI. The majority report neutral feelings toward AI-driven hiring tools, and more than a quarter actively distrust their fairness.

Taken together, the data suggests that employers are using AI because they need help and feel pressure to adapt, not because they are fully confident in the outcomes it produces. AI is being treated as an operational support tool, not yet as a trusted decision partner.

Candidates Are Experiencing AI Very Differently

Candidate trust in AI declined further between survey cycles. In July 2025, 19 percent of candidates said they trusted AI-driven hiring tools to evaluate applications fairly. By January 2026, that number had dropped to 14 percent, while overall distrust increased.

Candidates consistently report concerns about transparency, bias, and being filtered out without explanation. For many, AI feels opaque. Applications disappear. Communication slows. Feedback is rare.

From the candidate’s perspective, AI often shows up as silence rather than support.

This is not resistance to technology. Candidates routinely use digital tools to search for jobs, tailor resumes, and manage applications. The issue is not automation itself. It is the lack of clarity around how AI is used and the absence of visible human involvement when decisions feel automated.

As these concerns grow, regulatory attention is increasing as well. Several states and governing bodies are beginning to explore frameworks designed to protect transparency, fairness, and human oversight in AI-driven employment decisions. This reflects a broader recognition that trust must be designed into systems, not assumed after the fact.

The Trust Gap Creates Downstream Consequences

When adoption outpaces trust, friction increases. Employers may gain internal speed, but candidates lose confidence. That loss shows up in disengagement, lower response rates, and higher drop-off throughout the hiring process.

The data already reflects this trend. Candidate experience scores declined between July and January, with slow response times becoming the top reported frustration. AI may be helping internal workflows, but from the outside, the process often feels less responsive and less personal.

Trust gaps do not remain isolated. They affect employer brand, offer acceptance, and long-term talent relationships.

A Steadier Path Forward

The data does not suggest that AI should be rolled back. It suggests that AI should be implemented thoughtfully.

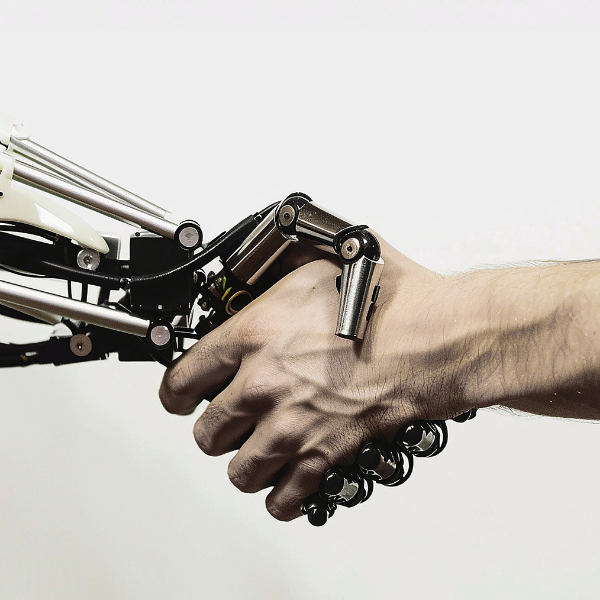

The most effective hiring teams treat AI as an enhancement to human judgment, not a substitute for it. Automation works best when it removes administrative burden, improves responsiveness, and creates space for better conversations.

Candidates still want to know someone is paying attention. Employers still need accountability in decision-making. AI can support both goals, but only when it is implemented transparently and paired with clear human oversight.

Technology is not the enemy. Fear-based adoption is the risk.

Organizations that build trust alongside adoption will see compounding returns over time. Those that chase efficiency alone may solve short-term capacity challenges while creating longer-term credibility issues.

Steady leadership in moments of rapid change often looks quieter than the headlines. It focuses less on keeping up and more on getting it right.

By: Adam McCoy